A pedometer measures your steps, but what if you had a similar automated device to measure your eating behavior? Evidence from nutritional studies has long shown that the speed, timing and duration of an individual’s eating behavior are strongly related to obesity and other health issues. While eating behaviors can be accurately measured in a controlled laboratory setting, a blind spot exists when researchers attempt to study how participants actually eat “in the wild.”

A new National Institutes of Health-funded project by three scientists at The University of Texas at Austin and the University of Rhode Island aims to shed light on real-world eating behaviors using AI-enabled wearable technology. The four-year, $2.4 million grant from the National Institute of Diabetes and Digestive and Kidney Diseases supports the researchers’ plan to develop a system that detects detailed information on eating motions, potentially every bite and chew.

The researchers will combine more than 60 years of expertise in nutrition, behavioral statistics, and engineering in an interdisciplinary project designed to generate more complete data on study participants’ nutritional habits and behaviors.

“We’re trying to answer, can you tell when someone is eating something? That might sound like a very simple question, but it turns out it’s very hard to do if you’re not in a very controlled setting,” said Edison Thomaz, professor in the Cockrell School of Engineering’s Chandra Family Department of Electrical and Computer Engineering. “When we talk, the jawbone definitely moves, but it doesn’t move in the rhythmic way it moves when you are chewing food. Those are the kinds of hints we’re going to try to leverage with sensors and AI algorithms. We will connect the two devices and see if we can come up with a more powerful system for detecting these kinds of eating behaviors.”

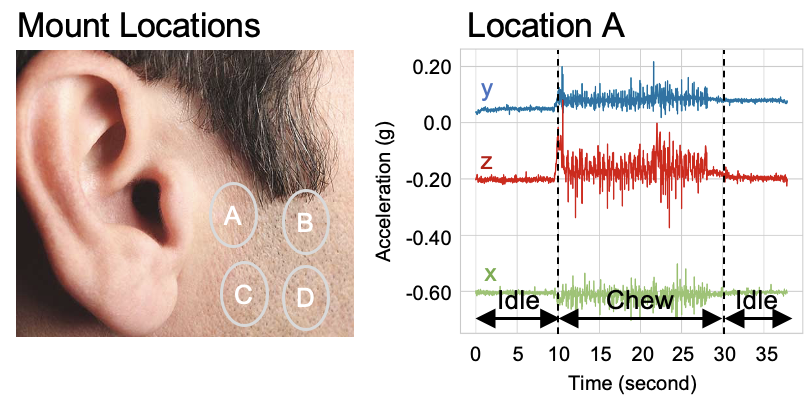

The researchers are using wearable sensors, including a button-sized sensor that sits on participants’ faces near the jawline, to measure volunteers’ personal eating behaviors, including chewing rate and intensity.

The study employs two devices: a typical smart watch and a discreet, custom-made sensor that sits on a participant’s jawline. The smart watch will capture the movement of arms and wrists when participants make typical eating gestures, measuring speed and frequency. Its data will be paired with readings from the small, button-sized jaw sensor, which will track the movements associated with chewing and record their speed and intensity.

The researchers will study participants over the course of four progressive phases. After being fitted with the unobtrusive system, participants will first eat prescribed meals measured with standardized lab procedures and under close supervision by the researchers. The next phase involves cafeteria-style eating, still under the researchers’ close supervision. The testing then moves into a restaurant setting, where participants have more control over their meals. Each phase reflects progressively more realistic eating conditions.

“The study moves through several successive experiments from an in-lab setting ‘into the wild,’ gradually moving from the internal validity of inferences in a lab-controlled setting to those grounded in the external validity of the real world,” said Theodore Walls, a professor of psychology at URI whose research produces statistical tools to make sense of real-time intensive longitudinal health behavior data.

Finally, study participants return to their usual lives while wearing the sensors to monitor their eating habits. Researchers will measure data such as the speed of eating, chewing and oral processing, how long food stays in the mouth before swallowing, and how quickly food disappears from participants’ plates.

“With these kind of chewing and oral processing behaviors — rapid eating, taking large bites, not pausing between bites, and not chewing sufficiently — people might be over-ingesting calories before the satiety signals have time to develop,” said Kathleen Melanson, professor of nutrition at URI. “So, by assessing these behaviors, we can help develop a system that can be used in interventions to help people adapt their ingestive behaviors to maximize satiety and help with energy intake.”

The study design also allows researchers to add other sensors, possibly one that monitors facial skin stretching. This stage is mostly about measurement, but the ultimate goal is to continue the progression out of the lab and test real programs that help people manage their eating behavior.

Participants in the study will be those who would potentially benefit the most from it: people at risk for obesity-related harms.